Traditional computer

Academician Tao Ruibao of Fudan University said that unless traditional computers are fundamentally changed, their development will become slower and slower and will stagnate completely after 10-15 years. This conclusion was endorsed by the academicians.

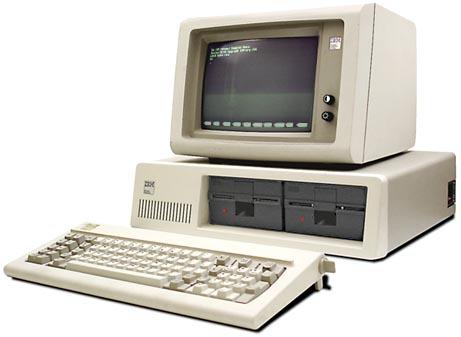

Introduction

A traditional computer is a machine system that receives and stores information according to human requirements, automatically processes and calculates data, and outputs the resulting information. It is an extension and expansion of human brain power and one of the major achievements of modern science.

Traditional computer systems are composed of a hardware (sub)system and a software (sub)system. The former is an organic combination of physical components based on the principles of electricity, magnetism, light, and mechanics, and is the physical entity on which the system works. The latter consists of the programs and documentation used to direct the entire system to work according to specified requirements.

Since the first electronic traditional computer came out in 1946, traditional computer technology has made remarkable progress in components, hardware architecture, software systems, and applications. Modern traditional computer systems range from microcomputers and personal computers to large-scale computers and their networks, and they come in many forms with different characteristics. They are widely used in scientific computing, transaction processing, and process control, are becoming increasingly common in every field of society, and have had a profound impact on social progress.

Electronic traditional computers are divided into two categories: digital and analog. Generally speaking, "traditional computer" refers to the digital kind, in which the data being processed is represented by discrete digital quantities. In an analog computer, the data being processed is represented by continuous analog quantities. Compared with digital machines, analog machines are fast and have simple interfaces with physical devices, but they have low accuracy, are difficult to use, have poor stability and reliability, and are expensive. As a result, analog machines have become largely obsolete and are used only where fast response matters more than accuracy. Hybrid computers, which combine the advantages of both, still retain a certain vitality.

Features

Traditional computer systems are characterized by accurate and fast calculation and judgment, good versatility, ease of use, and the ability to be connected to a network.

① Calculation: Almost all complex calculations can be carried out by traditional computers through arithmetic and logical operations.

② Judgment: Traditional computers can distinguish between different situations and choose different processing for each, so they can be used in management, control, confrontation, decision-making, reasoning, and other fields.

③ Storage: Traditional computers can store huge amounts of information.

④ Accuracy: As long as the word length is sufficient, the calculation accuracy is theoretically unlimited.

⑤ Speed: A single operation of a traditional computer can take as little as a few nanoseconds.

⑥ Generality: The traditional computer is programmable, and different programs realize different applications.

⑦ Ease of use: Abundant high-performance software and intelligent man-machine interfaces greatly facilitate use.

⑧ Networking: Multiple traditional computer systems can transcend geographic boundaries and share remote information and software resources with the help of communication networks.
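The point about accuracy and word length can be illustrated with a minimal sketch. It assumes that the precision setting of Python's standard `decimal` module is an acceptable stand-in for a longer or shorter machine word; the helper name `one_third` is hypothetical and chosen only for this illustration.

```python
from decimal import Decimal, localcontext


def one_third(digits: int) -> str:
    """Return 1/3 computed with `digits` significant decimal digits."""
    with localcontext() as ctx:
        # Assumption: the context precision plays the role of the word length.
        ctx.prec = digits
        return str(Decimal(1) / Decimal(3))


if __name__ == "__main__":
    # More digits of working precision give a more accurate result.
    for digits in (5, 20, 50):
        print(digits, one_third(digits))
```

The result is never exact for 1/3, but the error shrinks as the working precision grows, which is the sense in which accuracy is limited only by word length.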

Composition

Figure 1 shows the hierarchical structure of a traditional computer system. The core is the hardware system, the actual physical equipment that processes information. The outermost layer is the people who use traditional computers, that is, the users. The interface between the users and the hardware system is the software system, which can be roughly divided into three layers: system software, supporting software, and application software.

Hardware

The hardware system is mainly composed of the central processing unit, memory, the input-output control system, and various external devices. The central processing unit is the main component for high-speed computing and information processing, and its processing speed can reach hundreds of millions of operations per second. Memory is used to store programs, data, and files. It is usually composed of fast main memory (capacity up to hundreds of megabytes, or even gigabytes) and slow, high-capacity auxiliary memory (capacity from tens of gigabytes to more than hundreds of gigabytes). The various input and output devices are information converters between humans and machines, and the input-output control system manages the exchange of information between the external devices and the main memory (central processing unit).

Software

The innermost layer of the software system is the system software, which consists of an operating system, utility programs, and compilers. The operating system manages and controls the various software and hardware resources. Utility programs, such as text editors, are provided for the convenience of users. The compiler translates programs that users write in assembly language or a high-level language into machine-language programs that the machine can execute. Supporting software includes interface software, tool software, environment databases, and so on; it supports the machine's operating environment and provides software development tools. Supporting software can also be considered part of the system software. Application software consists of special-purpose programs written by users according to their needs. It runs with the help of system software and supporting software and is the outermost layer of the software system. Viewed this way, software can be divided into two types: system software and application software.

Classification

Traditional computer systems can be classified according to system function, performance, or architecture. ① Special-purpose computers and general-purpose computers: Early traditional computers were designed for specific purposes and were special-purpose in nature. Starting in the 1960s, general-purpose traditional computers were built to serve the three applications of scientific computing, transaction processing, and process control. In particular, the emergence of series machines, the adoption of standardized high-level programming languages, and the maturing of operating systems allowed one series of models to be offered in different software and hardware configurations to meet the needs of users in various industries, which further strengthened versatility. However, special-purpose machines are still being developed, such as all-digital simulators for continuous dynamic systems and ultra-miniature special-purpose computers for use in space.

② Supercomputers, mainframes, medium-sized computers, minicomputers, and microcomputers: Traditional computers developed with large and medium-sized machines as the main line. Minicomputers appeared in the late 1960s and microcomputers in the early 1970s. They are widely used because of their light weight, low price, strong functionality, and high reliability. In the 1970s, supercomputers capable of more than 50 million operations per second began to appear, and they were used specifically to solve major problems in science and technology, national defense, and economic development. Supercomputers, mainframes, medium-sized computers, minicomputers, and microcomputers form the tiers of the traditional computer family; each has its own uses, and all are developing rapidly.

③ Pipeline processors and parallel processors: These two kinds of processor were developed successfully to achieve high-speed processing through system structure and organization when the speed of components and devices is limited. Both target operations on sets of data (also called vectors) of the form $a_i \,\theta\, b_i = c_i$ ($i = 1, 2, 3, \ldots, n$; $\theta$ is an operator). The pipeline processor is a single instruction stream, single data stream (SISD) machine. It uses the principle of overlap to process the elements of a vector in pipeline fashion and achieves a high processing rate. The parallel processor is a single instruction stream, multiple data stream (SIMD) machine. It uses the principle of parallelism, replicating multiple processing components that process the elements of a vector simultaneously to obtain high speed (see parallel processing traditional computer systems). Pipeline and parallel techniques can also be combined, for example by replicating multiple pipeline components that work in parallel to obtain higher performance. Research on parallel algorithms is the key to the efficiency of such processors. Correspondingly, high-level programming languages can be extended with vector statements so that vector operations are organized effectively, or vector recognizers can be provided to identify the vector components in source programs automatically.

An ordinary host (scalar machine) can be equipped with an array processor (a dedicated pipeline machine used only for high-speed vector operations) to form a main-auxiliary machine system. This greatly improves the processing capacity of the system, gives a high performance-to-price ratio, and is quite widely applied.
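The vector operation $a_i \,\theta\, b_i = c_i$ described in item ③ can be sketched in a few lines. This is a simplified software model and not a description of any real processor: `simd_apply` groups the elements into hypothetical "lanes" that all receive the same operator in one step, and `pipeline_cycles` uses the standard idealized count of $n + s - 1$ cycles for $n$ elements passing through an $s$-stage pipeline with full overlap. All function names and the lane count are assumptions made for illustration.

```python
import operator


def simd_apply(a, b, theta, lanes=4):
    """Compute c_i = a_i theta b_i, `lanes` elements per step; return (c, steps)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    c, steps = [], 0
    for start in range(0, len(a), lanes):
        group_a = a[start:start + lanes]
        group_b = b[start:start + lanes]
        # One instruction is applied to every lane of this group in lockstep.
        c.extend(theta(x, y) for x, y in zip(group_a, group_b))
        steps += 1
    return c, steps


def pipeline_cycles(n, stages):
    """Idealized cycles for n elements through a fully overlapped pipeline."""
    return n + stages - 1 if n > 0 else 0


def unpipelined_cycles(n, stages):
    """Cycles when each element must finish all stages before the next starts."""
    return n * stages


if __name__ == "__main__":
    c, steps = simd_apply([1, 2, 3, 4, 5], [10, 20, 30, 40, 50], operator.add)
    print(c, steps)
    print(pipeline_cycles(8, 4), unpipelined_cycles(8, 4))
```

In this model, five elements on four lanes need two steps instead of five, and eight elements through a four-stage pipeline need 11 cycles instead of 32. Real machines lose some of this gain to memory access, stalls, and control overhead.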

④ Multiprocessors and multicomputer systems, distributed processing systems, and traditional computer networks: Multiprocessors and multicomputer systems are the necessary path for developing parallel technology further and are the main development direction for supercomputers and mainframes. They are multiple instruction stream, multiple data stream (MIMD) systems. Each machine processes its own instruction stream (process), and the machines communicate with each other to solve large-scale problems jointly. They offer a higher level of parallelism than parallel processors, with great potential and flexibility. Building a system from a large number of inexpensive microcomputers connected by an interconnection network to obtain high performance is one direction of research on multiprocessors and multicomputer systems. These systems require parallel algorithms to be studied at a higher level (the process level). High-level programming languages provide means for expressing concurrent processes and synchronizing them. The operating system is also very complex, because it must handle the communication, synchronization, and control of multiple processes across multiple computers.
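The MIMD idea of separate instruction streams that communicate and synchronize can be sketched as follows. This is only an illustration on a single machine: threads from Python's standard `threading` module stand in for separate processors, a `queue.Queue` stands in for the interconnection network, and the function name `run_mimd` is hypothetical.

```python
import queue
import threading


def run_mimd(tasks):
    """Run each (name, func, data) task on its own thread; return results by name."""
    results = queue.Queue()  # communication channel back to the coordinator

    def worker(name, func, data):
        # Each worker executes its own instruction stream on its own data.
        results.put((name, func(data)))

    threads = [threading.Thread(target=worker, args=task) for task in tasks]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()  # synchronization: wait until every worker has finished

    collected = {}
    while not results.empty():
        name, value = results.get()
        collected[name] = value
    return collected


if __name__ == "__main__":
    print(run_mimd([
        ("sum", sum, [1, 2, 3]),
        ("max", max, [4, 9, 2]),
        ("count", len, "abcd"),
    ]))
```

Unlike the SIMD case, each worker here runs a different function, which is what distinguishes multiple instruction streams from a single instruction applied to many data elements.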

A distributed system is a further development of the multicomputer system. It consists of multiple independent, interacting machines that are physically distributed and work together to solve user problems. Its system software is more complex (see distributed traditional computer systems).

Modern mainframes are almost all multicomputer systems with distributed functions. In addition to high-speed central processing units, they contain input-output processors (or front-end computers) that manage input and output, communication control processors that manage remote terminals and network communications, maintenance and diagnosis machines for system-wide maintenance and diagnosis, and database processors for database management. This is a low-level form of distributed system.

Multiple geographically distributed traditional computer systems are connected to each other through communication lines and network protocols to form a traditional computer network. According to geographical distance, networks are divided into local networks and remote networks. Traditional computers on a network can share information resources as well as software and hardware resources with each other. Ticket booking systems and information retrieval systems are examples of traditional computer network applications.

⑤ Von Neumann machines and non-von Neumann machines: The von Neumann machine, which is driven by stored programs and instructions, still dominates today. It executes instructions sequentially, which restricts the parallelism inherent in the problem being solved and hinders further improvement of processing speed. Non-von Neumann machines break with this principle, exploiting parallelism at the architectural level to improve system throughput. Research in this area is ongoing. Data flow computers, which are driven by the flow of data, and highly parallel reduction computers, which are driven by demand, are both promising non-von Neumann systems.
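The difference between instruction-driven and data-driven execution can be made concrete with a toy sketch. It assumes a very simplified model of a data flow machine in which an operation "fires" as soon as all of its inputs are available, with no program counter deciding the order; the graph format and the name `run_dataflow` are hypothetical and chosen only for this illustration.

```python
import operator


def run_dataflow(graph, inputs):
    """Evaluate graph = {node: (func, [input names])}; return (values, firing waves)."""
    values = dict(inputs)
    pending = dict(graph)
    waves = []
    while pending:
        # A node is ready once every one of its inputs has a value.
        ready = [node for node, (_, deps) in pending.items()
                 if all(dep in values for dep in deps)]
        if not ready:
            raise ValueError("graph has unsatisfied inputs or a cycle")
        # Ready nodes do not depend on each other, so they could fire in parallel.
        fired = {}
        for node in ready:
            func, deps = pending[node]
            fired[node] = func(*(values[dep] for dep in deps))
        values.update(fired)
        for node in ready:
            del pending[node]
        waves.append(sorted(ready))
    return values, waves


if __name__ == "__main__":
    # Computes (a + b) * (c - d); the addition and subtraction are independent.
    graph = {
        "s": (operator.add, ["a", "b"]),
        "t": (operator.sub, ["c", "d"]),
        "p": (operator.mul, ["s", "t"]),
    }
    values, waves = run_dataflow(graph, {"a": 1, "b": 2, "c": 7, "d": 3})
    print(values["p"], waves)
```

In the example, the addition and the subtraction fire in the same wave because neither waits on the other, whereas a sequential machine would execute them one after another even though the problem does not require it.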

The computer of the future should be a quantum computer

If current chips continue to be used, the development of traditional computers will come to an end in 15 years. At an academician salon held yesterday by the Academician Center of the Chinese Academy of Engineering in Shanghai, the academicians predicted that 10-15 years from now will be the hard limit for the development of traditional computers. They called on China to speed up the development of quantum computers.