Background knowledge

The ID3 algorithm was first proposed by J. Ross Quinlan at the University of Sydney in 1975 as a classification and prediction algorithm. The core of the algorithm is "information entropy". ID3 calculates the information gain of each attribute and treats an attribute with high information gain as a good attribute. At each step, the attribute with the highest information gain is selected as the splitting criterion, and this process is repeated until a decision tree that classifies the training examples perfectly is generated.

A decision tree classifies data in order to make predictions. The decision tree method first builds a decision tree from the training set. If the tree cannot classify all objects correctly, some of the exceptions are added to the training set and the process is repeated until a correct decision set is formed. The decision tree is the tree-structured representation of that decision set.

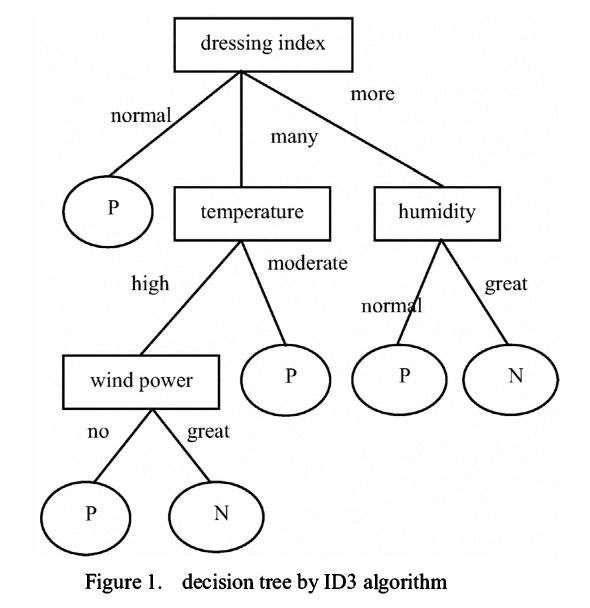

A decision tree is composed of decision nodes, branches, and leaves. The top node of the tree is the root node, and each branch leads either to a new decision node or to a leaf. Each decision node represents a question or decision and usually corresponds to an attribute of the object being classified. Each leaf node represents a possible classification result. As the tree is traversed from top to bottom, a test is applied at each node, and the different outcomes of that test lead to different branches until a leaf node is finally reached. This is the process of classifying with a decision tree: several variables are used to determine the category an object belongs to.
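The top-to-bottom traversal described above can be sketched in a few lines of Python. This is only an illustration: the nested-dictionary layout, the `classify` function, and the attribute names (`outlook`, `humidity`, `wind`) are assumptions made for this example and do not come from the original text.

```python
# Hypothetical tree layout (an assumption for this sketch):
# a decision node is a dict {"attribute": name, "branches": {value: subtree}},
# and a leaf is a plain class-label string.
tree = {
    "attribute": "outlook",
    "branches": {
        "sunny": {
            "attribute": "humidity",
            "branches": {"high": "no", "normal": "yes"},
        },
        "overcast": "yes",
        "rain": {
            "attribute": "wind",
            "branches": {"strong": "no", "weak": "yes"},
        },
    },
}


def classify(node, example):
    """Walk from the root to a leaf, following the branch matching each test."""
    while isinstance(node, dict):                 # still at a decision node
        value = example[node["attribute"]]        # apply the node's test
        node = node["branches"][value]            # follow the matching branch
    return node                                   # a leaf: the class label


example = {"outlook": "sunny", "humidity": "normal", "wind": "weak"}
print(classify(tree, example))  # prints "yes"
```

Each loop iteration corresponds to one test at a decision node; the loop ends as soon as a leaf is reached.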

ID3 algorithm

The ID3 algorithm was first proposed by Quinlan. It is based on information theory and uses information entropy and information gain as its measures, so as to perform inductive classification of data. The following are some basic concepts from information theory:

Definition 1: If there are n messages with the same probability, the probability p of each message is 1/n, and the amount of information conveyed by one message is $-\log_2(1/n) = \log_2 n$.
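As a quick check of Definition 1, take n = 8 equally likely messages (the value 8 is chosen here only for illustration):

```latex
-\log_2\left(\tfrac{1}{8}\right) = \log_2 8 = 3 \text{ bits}
```

In other words, three yes/no questions are enough to single out one of eight equally likely messages.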

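Definition 2: If there are n messages with the given probability distribution $P = (p_1, p_2, \ldots, p_n)$, then the amount of information conveyed by the distribution is called the entropy of P:

$$I(P) = -(p_1 \log_2 p_1 + p_2 \log_2 p_2 + \cdots + p_n \log_2 p_n)$$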

Definition 3: If a record set T is divided into independent categories $C_1, C_2, \ldots, C_k$ according to the value of the category attribute, the amount of information needed to identify which category an element of T belongs to is $\mathrm{Info}(T) = I(P)$, where P is the probability distribution of $C_1, C_2, \ldots, C_k$, that is, $P = (|C_1|/|T|, \ldots, |C_k|/|T|)$.
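Definitions 2 and 3 can be illustrated with a short Python sketch. It takes the entropy of a distribution P to be the negated sum of p * log2(p) over its entries, and Info(T) to be that entropy applied to the class proportions of T. The function names `entropy` and `info`, and the toy labels, are assumptions made for this example.

```python
import math
from collections import Counter


def entropy(probabilities):
    """I(P) = -sum(p * log2(p)); entries with p == 0 contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def info(labels):
    """Info(T): entropy of the class distribution (|C1|/|T|, ..., |Ck|/|T|)."""
    total = len(labels)
    counts = Counter(labels)
    return entropy(count / total for count in counts.values())


# Hypothetical record set: 9 records of class "yes" and 5 of class "no".
labels = ["yes"] * 9 + ["no"] * 5
print(round(info(labels), 3))      # prints 0.94
print(entropy([0.25] * 4))         # four equally likely classes: prints 2.0
```

The second call agrees with Definition 1: four equally likely messages carry log2(4) = 2 bits each.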

Definition 4: If we first divide T into sets $T_1, T_2, \ldots, T_n$ according to the value of a non-category attribute X, then the amount of information needed to identify the class of an element of T is the weighted average of the information needed to identify the class of an element of each $T_i$, that is, the weighted average of $\mathrm{Info}(T_i)$:

$$\mathrm{Info}(X, T) = \sum_{i=1}^{n} \frac{|T_i|}{|T|} \, \mathrm{Info}(T_i)$$

Definition 5: Information gain is the difference between two amounts of information. One is the amount of information needed to identify the class of an element of T; the other is the amount of information needed to identify the class of an element of T after the value of attribute X has been obtained. The information gain formula is:

$$\mathrm{Gain}(X, T) = \mathrm{Info}(T) - \mathrm{Info}(X, T)$$
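Definitions 4 and 5 can be illustrated with a minimal Python sketch: Info(X, T) is the size-weighted average of the entropy of each subset induced by X, and the gain is what remains after subtracting it from Info(T). The function names, the record layout (a list of dictionaries), and the toy records are assumptions made for this example.

```python
import math
from collections import Counter, defaultdict


def info(labels):
    """Info(T): entropy of the class labels in a record set."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())


def info_x(records, attribute, target):
    """Info(X, T): weighted average of Info(Ti) over the subsets Ti induced by X."""
    subsets = defaultdict(list)
    for record in records:
        subsets[record[attribute]].append(record[target])   # group labels by X's value
    total = len(records)
    return sum(len(labels) / total * info(labels) for labels in subsets.values())


def gain(records, attribute, target):
    """Gain(X, T) = Info(T) - Info(X, T)."""
    all_labels = [record[target] for record in records]
    return info(all_labels) - info_x(records, attribute, target)


# Hypothetical toy records: "windy" is the non-category attribute X,
# "play" is the category attribute.
records = [
    {"windy": "no", "play": "yes"},
    {"windy": "no", "play": "yes"},
    {"windy": "yes", "play": "no"},
    {"windy": "yes", "play": "yes"},
]
print(round(gain(records, "windy", "play"), 3))  # prints 0.311
```

Here Info(T) is about 0.811 (three "yes", one "no"), the "windy = no" subset is pure and the "windy = yes" subset is evenly split, so Info(X, T) = 0.5 and the gain is about 0.311.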

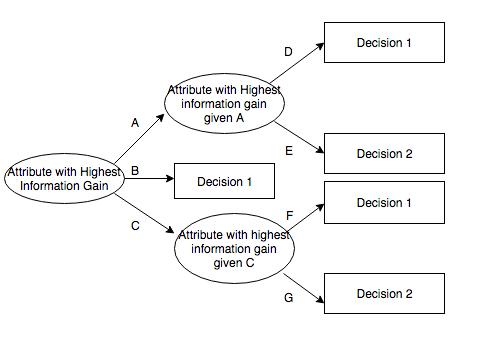

The ID3 algorithm calculates the information gain of each attribute and selects the attribute with the highest gain as the test attribute for the given set. It creates a node for the selected test attribute, labels the node with that attribute, creates a branch for each value of the attribute, and partitions the samples accordingly.
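The selection and partitioning step can be sketched recursively in Python. This is a minimal illustration, not a reference implementation: the function names, the dictionary-based tree layout, and the toy rows are assumptions made for this example, and the majority-class fallback used when no attributes remain is an added assumption that the text above does not describe.

```python
import math
from collections import Counter


def info(rows, target):
    """Entropy of the class labels found in rows."""
    total = len(rows)
    counts = Counter(row[target] for row in rows)
    return -sum(c / total * math.log2(c / total) for c in counts.values())


def gain(rows, attribute, target):
    """Information gain obtained by splitting rows on attribute."""
    total = len(rows)
    remainder = 0.0
    for value in {row[attribute] for row in rows}:
        subset = [row for row in rows if row[attribute] == value]
        remainder += len(subset) / total * info(subset, target)
    return info(rows, target) - remainder


def id3(rows, attributes, target):
    """Build a tree: leaves are labels, nodes are {"attribute", "branches"} dicts."""
    labels = [row[target] for row in rows]
    if len(set(labels)) == 1:                    # all samples agree: leaf
        return labels[0]
    if not attributes:                           # assumption: majority-class leaf
        return Counter(labels).most_common(1)[0][0]
    best = max(attributes, key=lambda a: gain(rows, a, target))
    remaining = [a for a in attributes if a != best]
    branches = {}
    for value in sorted({row[best] for row in rows}):   # one branch per value
        subset = [row for row in rows if row[best] == value]
        branches[value] = id3(subset, remaining, target)
    return {"attribute": best, "branches": branches}


# Hypothetical training rows, invented for this sketch.
rows = [
    {"outlook": "sunny", "windy": "no", "play": "no"},
    {"outlook": "sunny", "windy": "yes", "play": "no"},
    {"outlook": "overcast", "windy": "no", "play": "yes"},
    {"outlook": "rain", "windy": "no", "play": "yes"},
    {"outlook": "rain", "windy": "yes", "play": "no"},
]
tree = id3(rows, ["outlook", "windy"], "play")
print(tree["attribute"])  # prints "outlook"
```

On these rows, `outlook` has the higher gain and becomes the root; only the `rain` branch is still mixed, so it is split again on `windy`.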

Data description

ID3 places certain requirements on the sample data it uses:

Attribute-value description - the same attributes must describe each example, and each attribute must have a fixed number of values.

Predefined classes - the attributes of an example must already be defined; that is, they are not learned by ID3.

Discrete classes - the classes must be sharp and distinct. Breaking continuous classes into vague categories (such as a metal being "hard, quite hard, flexible, soft, quite soft") is not reliable.

Sufficient examples - because inductive generalization is used (that is, the result cannot be proven), enough test cases must be selected to distinguish valid patterns from chance coincidences.

Attribute selection

ID3 must determine which attribute is best. A statistical property called information gain, which is based on entropy, measures how well a given attribute separates the training examples into the target classes. The attribute with the highest information gain (the one whose information is most useful for classification) is selected. To define gain precisely, we first borrow a concept from information theory called entropy. Every attribute has an entropy.